Get metrics from Fluentd to:

- Visualize Fluentd performance.

- Correlate the performance of Fluentd with the rest of your applications.

The Fluentd check is included in the Datadog Agent package, so you don't need to install anything else on your Fluentd servers.

In your Fluentd configuration file, add a monitor_agent source:

    <source>
      @type monitor_agent
      bind 0.0.0.0
      port 24220
    </source>

Follow the instructions below to configure this check for an Agent running on a host. For containerized environments, see the Containerized section.

Edit the `fluentd.d/conf.yaml` file, in the `conf.d/` folder at the root of your Agent's configuration directory, to start collecting your Fluentd metrics. See the sample fluentd.d/conf.yaml for all available configuration options.

    init_config:

    instances:
      ## @param monitor_agent_url - string - required
      ## Monitor Agent URL to connect to.
      #
      - monitor_agent_url: http://example.com:24220/api/plugins.json
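To confirm that the `monitor_agent` source is reachable before pointing the Agent at it, you can query the same URL yourself. The following is a minimal Python sketch, not part of the integration: the function names `fetch_plugins` and `summarize_plugins` are hypothetical, and it assumes the endpoint returns a JSON object with a `plugins` list whose entries may carry `retry_count`, `buffer_queue_length`, and `buffer_total_queued_size` (fields that are typically present only for buffered output plugins).

```python
import json
import urllib.request

# Fields this sketch looks for; assumed from typical monitor_agent output.
METRIC_FIELDS = ("retry_count", "buffer_queue_length", "buffer_total_queued_size")


def fetch_plugins(url, timeout=5.0):
    """Fetch and decode the JSON document served by monitor_agent."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def summarize_plugins(payload):
    """Return {plugin_id: {field: value}} for plugins reporting any metric field."""
    summary = {}
    for plugin in payload.get("plugins", []):
        # Keep a field when it is present, including a legitimate value of 0.
        metrics = {f: plugin[f] for f in METRIC_FIELDS if plugin.get(f) is not None}
        if metrics:
            summary[plugin.get("plugin_id", "unknown")] = metrics
    return summary
```

Calling `summarize_plugins(fetch_plugins("http://localhost:24220/api/plugins.json"))` on the Fluentd host should list each buffered output plugin. An empty result or a connection error suggests that the `monitor_agent` source is not active or that no buffered outputs are configured.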

You can use the Datadog Fluentd plugin to forward logs directly from Fluentd to your Datadog account.

Proper metadata (including hostname and source) is the key to unlocking the full potential of your logs in Datadog. By default, the hostname and timestamp fields should be properly remapped via the remapping for reserved attributes.

Add the `ddsource` attribute, set to the name of the log integration, to your logs to trigger the integration's automatic setup in Datadog.

Host tags are automatically set on your logs if there is a matching hostname in your infrastructure list. Use the ddtags attribute to add custom tags to your logs:

Setup Example:

    # Match events tagged with "datadog.**" and
    # send them to Datadog
    <match datadog.**>
      @type datadog
      @id awesome_agent
      api_key <your_api_key>

      # Optional
      include_tag_key true
      tag_key 'tag'

      # Optional tags
      dd_source '<INTEGRATION_NAME>'
      dd_tags '<KEY1:VALUE1>,<KEY2:VALUE2>'

      <buffer>
        @type memory
        flush_thread_count 4
        flush_interval 3s
        chunk_limit_size 5m
        chunk_limit_records 500
      </buffer>
    </match>

By default, the plugin is configured to send logs through HTTPS (port 443) using gzip compression. You can change this behavior by using the following parameters:

- `use_http`: Set this to `false` if you want to use TCP forwarding, and update the `host` and `port` accordingly (default is `true`).

- `use_compression`: Compression is only available for HTTP. Disable it by setting this to `false` (default is `true`).

- `compression_level`: Set the compression level for HTTP. The range is from 1 to 9, 9 being the best ratio (default is `6`).

Additional parameters can be used to change the endpoint used in order to go through a proxy:

- `host`: The proxy endpoint for logs not directly forwarded to Datadog (default value: `http-intake.logs.datadoghq.com`).

- `port`: The proxy port for logs not directly forwarded to Datadog (default value: `80`).

- `ssl_port`: The port used for logs forwarded with a secure TCP/SSL connection to Datadog (default value: `443`).

- `use_ssl`: Instructs the Agent to initialize a secure TCP/SSL connection to Datadog (default value: `true`).

- `no_ssl_validation`: Disables SSL hostname validation (default value: `false`).

The `host` parameter can also be used to send logs to Datadog EU:

    <match datadog.**>
      #...
      host 'http-intake.logs.datadoghq.eu'
    </match>

Datadog tags are what let you jump from one part of the product to another. Having the right metadata associated with your logs is therefore important for moving from a container view or any container metrics to the most closely related logs.

If your logs contain any of the following attributes, these attributes are automatically added as Datadog tags on your logs:

- `kubernetes.container_image`

- `kubernetes.container_name`

- `kubernetes.namespace_name`

- `kubernetes.pod_name`

- `docker.container_id`

While the Datadog Agent collects Docker and Kubernetes metadata automatically, Fluentd requires a plugin for this. We recommend using fluent-plugin-kubernetes_metadata_filter to collect this metadata.

Configuration example:

    # Collect metadata for logs tagged with "kubernetes.*"
    <filter kubernetes.*>
      @type kubernetes_metadata
    </filter>

For containerized environments, see the Autodiscovery Integration Templates for guidance on applying the parameters below.

| Parameter | Value |
|---|---|
| `<INTEGRATION_NAME>` | `fluentd` |
| `<INIT_CONFIG>` | blank or `{}` |
| `<INSTANCE_CONFIG>` | `{"monitor_agent_url": "http://%%host%%:24220/api/plugins.json"}` |
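As one illustration of how these three values fit together, the sketch below builds the Docker label values that Autodiscovery reads. It assumes Docker-label-based Autodiscovery with the `com.datadoghq.ad.*` label names. The helper name `autodiscovery_labels` is hypothetical, and other platforms (for example, Kubernetes annotations) use a different syntax described in the Autodiscovery documentation.

```python
import json


def autodiscovery_labels(check_name, init_config, instance_config):
    """Build Docker label values for one Autodiscovery check template.

    Each label holds a JSON list, so several checks can share one container.
    """
    return {
        "com.datadoghq.ad.check_names": json.dumps([check_name]),
        "com.datadoghq.ad.init_configs": json.dumps([init_config]),
        "com.datadoghq.ad.instances": json.dumps([instance_config]),
    }


labels = autodiscovery_labels(
    "fluentd",
    {},
    {"monitor_agent_url": "http://%%host%%:24220/api/plugins.json"},
)

# Print the labels in NAME=VALUE form for review.
for name, value in labels.items():
    print(f"{name}={value}")
```

The `%%host%%` template variable is left untouched here. The Agent substitutes it at runtime with the address of the discovered container.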

Run the Agent's status subcommand and look for fluentd under the Checks section.

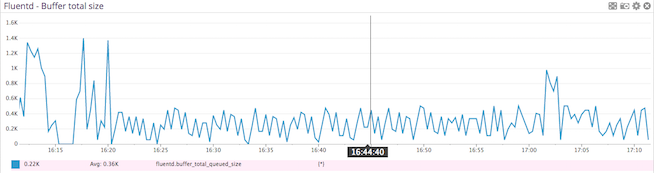

See metadata.csv for a list of metrics provided by this integration.

The Fluentd check does not include any events.

fluentd.is_ok:

Returns CRITICAL if the Agent cannot connect to Fluentd to collect metrics, otherwise returns OK.

Need help? Contact Datadog support.